Skirting bandwidth and privacy concerns, Apical’s smart motion-sensor technology enables behavioral recognition without invasive video analytics.

Traditional security sensors can tell you if motion is detected, and may go so far as to determine if the mover is a person, a dog or branches swaying in the wind. But that’s pretty much it.

For more data on the activity, and for richer integration with home automation systems, you would step up to video analytics or wearables. Video analytics can help observers track objects, count people, recognize faces and even ascertain emotions. Wearables (as well as tags for objects) can more easily enable personal recognition and activity tracking.

Both of these solutions can be pricey and/or invasive. Furthermore, they can consume large amounts of data and require cloud-based processing.

A new sensor technology, Apical Resident Technology (ART) from London-based Apical Ltd., enables much of the functionality of advanced video analytics and wearables, but without the bandwidth requirements and invasion of privacy.

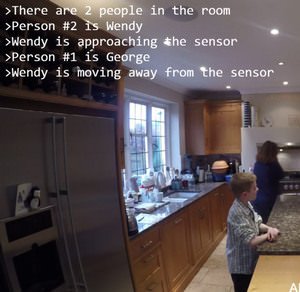

The technology, which might be likened to a ‘very very good PIR’ or ‘video analytics without the video,’ looks at ‘pixel disruption’ in light streams to decipher activity, according to Paul Strzelecki, a business consultant for Apical Limited.

In fact, Apical’s legacy is in video processing, with its technology embedded in many smartphones and surveillance cameras. The new ART technology employs image sensors like those found in cameras, but extracts only the data, not the images, thus reducing bandwidth requirements and easing privacy concerns.

As with video analytics, the ART technology is so accurate, says Strzelecki, that it can ‘read’ relationships between people as distant or intimate, for example. It can also determine from body language whether a person might be threatening or in medical distress.

Speaking at Smart Home World 2015 in London this week, Apical CEO Michael Tusch explains that ART distills data into four buckets: trajectory, pose, gesture and identity. With that data, Apical’s technology can 1) read people and behavior, 2) learn it and 3) generate events based on the data.

For example, if an unknown person takes an unusual path across the front lawn, a system might trigger the sprinklers to turn on.
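A rough sketch of that read-then-trigger flow, in Python, might look like the following. It is purely illustrative and not Apical’s API: the `Observation` record, its field names, the `on_usual_path` helper and the `front_lawn_rule` function are all hypothetical, as are the units and tolerance. Only the four data buckets (trajectory, pose, gesture, identity) come from Tusch’s description.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) position; metres are an assumption


@dataclass
class Observation:
    """Hypothetical record holding the four buckets Tusch describes."""
    trajectory: List[Point]    # path taken so far
    pose: str                  # e.g. "standing", "crouching"
    gesture: Optional[str]     # e.g. "wave", or None if no gesture seen
    identity: Optional[str]    # None means the person is not recognized


def on_usual_path(trajectory: List[Point], usual_path: List[Point],
                  tolerance: float = 1.0) -> bool:
    """True if every observed point is within `tolerance` (per axis)
    of at least one point on the usual path."""
    return all(
        any(abs(x - ux) <= tolerance and abs(y - uy) <= tolerance
            for ux, uy in usual_path)
        for x, y in trajectory
    )


def front_lawn_rule(obs: Observation, usual_path: List[Point]) -> Optional[str]:
    """Return an event name when an unknown person leaves the usual path."""
    if obs.identity is None and not on_usual_path(obs.trajectory, usual_path):
        return "sprinklers_on"
    return None


# Assumed layout: a straight walkway from the street (y=0) to the door (y=10).
walkway = [(0.0, float(y)) for y in range(0, 11)]
stranger = Observation([(0.0, 0.0), (4.0, 3.0), (6.0, 5.0)], "standing", None, None)
resident = Observation([(0.0, 0.0), (4.0, 3.0)], "standing", None, "alice")

print(front_lawn_rule(stranger, walkway))  # sprinklers_on
print(front_lawn_rule(resident, walkway))  # None
```

The rule fires only when both conditions hold, so a recognized resident cutting across the lawn, or a stranger who stays on the walkway, would not set off the sprinklers.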

Over time, as a sensor network learns activities and behaviors in a household, a ‘system’ becomes even more robust. It can potentially detect early stages of Alzheimer’s disease based on very precise movements such as a person stopping mid-way to the kitchen, pausing and reversing direction.
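To make the stop, pause and reverse pattern concrete, here is a minimal Python sketch that flags it in a stream of positions. It is an assumption-laden illustration, not Apical’s method and not a diagnostic tool: the function name `paused_and_reversed`, the two-second pause threshold and the stillness tolerance are all hypothetical. Only the 60 fps sampling rate is taken from the article.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # one (x, y) position per frame


def paused_and_reversed(path: List[Point], fps: int = 60,
                        min_pause_s: float = 2.0,
                        still_eps: float = 0.01) -> bool:
    """Return True if the walker stops for at least `min_pause_s` seconds
    and then ends up heading back the way they came."""
    need = int(min_pause_s * fps)  # still frames required to count as a pause
    start = 0                      # index where the current still run began
    for i in range(1, len(path)):
        moved = (abs(path[i][0] - path[i - 1][0]) > still_eps or
                 abs(path[i][1] - path[i - 1][1]) > still_eps)
        if not moved:
            continue
        if i - 1 - start >= need:
            # Displacement from the start of the walk to the pause point ...
            before = (path[start][0] - path[0][0], path[start][1] - path[0][1])
            # ... and from the pause point to the end of the walk.
            after = (path[-1][0] - path[i - 1][0], path[-1][1] - path[i - 1][1])
            # A negative dot product means the two legs point in opposing directions.
            if before[0] * after[0] + before[1] * after[1] < 0:
                return True
        start = i
    return False


# Assumed scenario: walk out for 100 frames, stand still for 3 s, walk back.
walk_out = [(0.02 * i, 0.0) for i in range(100)]
pause = [walk_out[-1]] * 180
walk_back = [(walk_out[-1][0] - 0.02 * i, 0.0) for i in range(1, 101)]
walk_on = [(walk_out[-1][0] + 0.02 * i, 0.0) for i in range(1, 101)]

print(paused_and_reversed(walk_out + pause + walk_back))  # True
print(paused_and_reversed(walk_out + pause + walk_on))    # False
```

A single flagged walk would mean little on its own; the article’s point is that a system would learn what is normal for a household over time and notice when such patterns become unusually frequent.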

The sensors can create a ‘behavioral footprint,’ which Strzelecki calls BNA.

In a more typical security application, the Apical technology can reduce false alarms ‘almost to zero,’ says Tusch, because it knows who should be where and when, even if an individual isn’t carrying a phone or wearing a magic bracelet.

And all of this happens ‘entirely at the edge, at low power and low cost,’ says Tusch, who adds that raw data is captured continuously in real time at 60 fps.

Again, this type of tracking is readily available today, either through video analytics or through wearables, provided the individual wears some kind of device. Both approaches typically involve the cloud. But Apical requires so little processing power and bandwidth that information can be processed and stored locally at consumer-friendly prices.

‘None of the information needs to go outside the house,’ Strzelecki says. ‘If it’s in the hardware, it can be processed on the fly.’

Imagine, for example, installing Apical-enabled sensors in the bathroom, where privacy is of utmost concern and individuals are apt to strip off wearables before a shower. An Apical-enabled sensor could nevertheless detect a fall or unusual activity.

Strzelecki says the Apical technology will be embedded into chipsets and ready for deployment this year. Sensors with the technology should cost little more than traditional PIRs, he says.

Tusch adds that Apical soon will announce a major partnership with a U.S. camera manufacturer.

Here’s how Apical describes its ART technology:

ART provides this coherent representation to the ecosystem of devices in the smart home, enabling them to respond naturally to people, interpret and anticipate their intent, provide authentication and parental control, and learn their behaviours and needs. In the process ART becomes the faithful, digital friend enhancing our daily living experience by putting us the users fully in control.

ART achieves this via Apical’s breakthrough machine intelligence technology called Spirit, which is embedded into one or more networked sensors in the home. Spirit is a chip-level technology, which converts raw image sensor data into a stream of virtualized object data, translating the scene into machine-readable form. It does this at-the-edge and in real time. It brings the kind of intelligence previously available only on supercomputers into a tiny form factor, which can be deployed in any internet-of-things device. It does this without forming any imagery or video, providing an absolute protection against any entity looking into the home.